Last week brought news of a truly interesting advance in porting opioid production to yeast. This is pretty cool science, because it involves combining enzymes from several different organisms to produce a complex and valuable chemical, although no one has yet managed to integrate the whole synthetic pathway in microbes. It is also potentially pretty cool economics, because implementing opiate production in yeast should dramatically lower the price of a class of important pain medications to a point that developing countries might finally be able to afford.

Alongside the scientific article was a Commentary – with images of drug dens and home beer brewing – explicitly suggesting that high doses of morphine and other addictive narcotics would soon be brewed at home in the garage. The text advertised “Home-brew opiates” – wow, just like beer! The authors of the Commentary used this imagery to argue for immediate regulation of 1) yeast strains that can make opioids (even though no such strains exist yet), and 2) the DNA sequences that code for the opioid synthesis pathways. This is a step backward for biosecurity policy, by more than a decade, because the proposal embraces measures known to be counterproductive for security.

The wrong recipe.

I'll be very frank here – proposals like this are deep failures of the science policy enterprise. The logic that leads to “must regulate now!” 1) is methodologically flawed and 2) ignores data we have in hand about the impacts of restricting access to technology and markets. In what follows, I will deal in due course with both kinds of failures, as well as looking at the predilection to assume regulation and restriction should be the primary policy response to any perceived threat.

There are some reading this who will now jump to “Carlson is yet again saying that we should have no regulation; he wants everything to be available to anyone.” This is not my position, and never has been. Rather, I insist that our policies be grounded in data from the real world. And the real world data we have demonstrates that regulation and restriction often cause more harm than good. Moreover, harm is precisely the impact we should expect by restricting access to democratized biological technologies. What if even simple analyses suggest that proposed actions are likely to make things worse? What if the specific policy actions recommended in response to a threat have already been shown to exacerbate damage from the threat? That is precisely the case here. I am constantly confronted with people saying, "That's all very well and good, but what do you propose we do instead?" The answer is simple: I don't know. Maybe nothing. Maybe there isn't anything we can do. But for now, I just want us to not make things worse. In particular I want to make sure we don't screw up the emerging bioeconomy by building in perverse incentives for black markets, which would be the worst possible development for biosecurity.

Policy conversations at all levels regularly make these same mistakes, and the arguments are nearly uniform in structure. “Here is something we don't know about, or are uncertain about, and it might be bad – really, really bad – so we should most certainly prepare policy options to prevent the hypothetical worst!” Exclamation points are usually just implied throughout, but they are there nonetheless. The policy options almost always involve regulation and restriction of a technology or process that can be construed as threatening, usually with little or no consideration of what that threatening thing might plausibly grow into, nor of how similar regulatory efforts have fared historically.

This brings me to the set up. Several news pieces (e.g., the NYT, Buzzfeed) succinctly pointed out that the “home-brew” language was completely overblown and inflammatory, and that the Commentary largely ignored both the complicated rationale for producing opioids in yeast and the complicated benefits of doing so. The Economist managed to avoid getting caught up in discussing the Commentary, remaining mostly focussed on the science, while in the last paragraph touching on the larger market issues and potential future impacts of “home brew opium” to pull the economic rug out from under heroin cartels. (Maybe so. It's an interesting hypothesis, but I won't have much to say about it here.) Over at Biosecu.re, Piers Millet – formerly of the Biological Weapons Convention Implementation Support Unit – calmly responded to the Commentary by observing that, for policy inspiration, the authors look backward rather than forward, and that the science itself demonstrates the world we are entering requires developing completely new policy tools to deal with new technical and economic realities.

Stanford's Christina Smolke, who knows a thing or two about opioid production in yeast, observed in multiple news outlets that getting yeast to produce anything industrially at high yields is finicky to get going and then hard to maintain as a production process. It's relatively easy to produce trace amounts of lots of interesting things in microbes (ask any iGEM team); it is very hard and very expensive to scale up to produce interesting amounts of interesting things in microbes (ask any iGEM team). Note that we are swimming in data about how hard this is to do, which is an important part of this story. In addition to the many academic examples of challenges in scaling up production, the last ten years are littered with startups that failed at scale up. The next ten years, alas, will see many more.

Even with an engineered microbial strain in hand, it can be extraordinarily hard to make a microbe jump through the metabolic and fermentation hoops to produce interesting/useful quantities of a compound. And then transferring that process elsewhere is very frequently its own expensive and difficult effort. It is not true that you can just mail a strain and a recipe from one place to another and automatically get the same result. However, it is true that all this will get easier over time, and many people are working on reproducible process control for biological production.

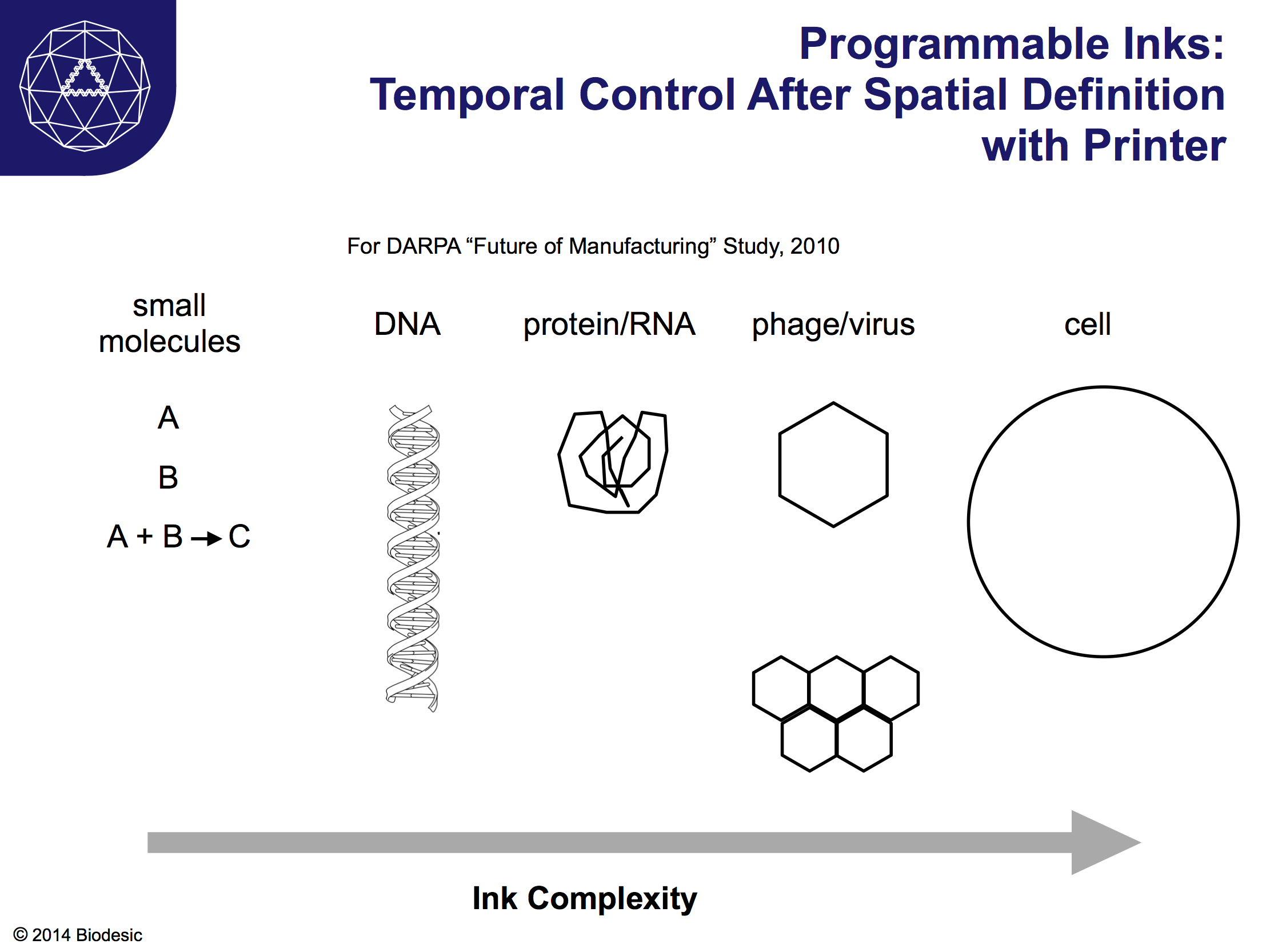

That future looks amazing. I've written many times about how the future of the economy looks like beer and cows – in other words, that our economy will inevitably be based on distributed biological manufacturing. But that is the future: i.e., not the present. Nor is it imminent. I truly wish it were imminent, but it is not. Whole industries exist to solve these problems, and much more money and effort will be spent before we get there. The economic drivers are huge. Some of the investments made by Bioeconomy Capital are, in fact, aimed at eventually facilitating distributed biological manufacturing. But, if nothing else, these investments have taught me just how much effort is required to reach that goal. If anybody out there has a credible plan to build the Cowborg or to microbrew chemicals and pharmaceuticals as suggested by the Commentary, I will be your first investor. (I said “credible”! Don't bother me otherwise.) But I think any sort of credible plan is years away. For the time being, the only thing we can expect to brew like beer is beer.

FBI Supervisory Special Agent Ed You makes great use of the “brewing bad” and “baking bad” memes, mentioned in the Commentary, in talking to students and professionals alike about the future of drug production. But this is in the context of taking personal responsibility for your own science and for speaking up when you see something dangerous. I've never heard Ed say anything about increasing surveillance and enforcement efforts as the way forward. In fact, in the Times piece, Ed specifically says, “We’ve learned that the top-down approach doesn’t work.” I can't say exactly why Ed chose that turn of phrase, but I can speculate that it is based 1) on his own experience as a professional bench molecular biologist, 2) the catastrophically bad impacts of the FBI's earlier arrests and prosecutions of scientists and artists for doing things that were legal, and 3) the official change in policy from the White House and National Security Council away from suppression and toward embracing and encouraging garage biology. The standing order at the FBI is now engagement. In fact, Ed You's arrival on the scene was coincident with any number of positive policy changes in DC, and I am happy to give him all the credit I can. Moreover, I completely agree with Ed and the Commentary authors that we should be discussing early on the implications of new technologies, an approach I have been advocating for 15 years. But I completely disagree with the authors that the current or future state of the technology serves as an indicator of the need to prepare some sort of regulatory response. We tried regulating fermentation once before; that didn't work out so well [1].

Badly baked regulatory policy.

So now we're caught up to about the middle of the Commentary. At this point, the story is like other such policy stories. “Assume hypothetical thing is inevitable: discuss and prepare regulation.” And like other such stories, here is where it runs off the rails with a non sequitur common in policy work. Even if the assumption of the thing's inevitability is correct (which is almost always debatable), the next step should be to assess the impact of the thing. Is it good, or is it bad? (By a particular definition of good and bad, of course, but never mind that for now.) Usually, this question is actually skipped and the thing is just assumed to be bad and in need of a policy remedy, but the assumption of badness, breaking or otherwise, isn't fatal for the analysis.

Let's say it looks bad – bad, bad, bad – and the goal of your policy is to try to either head it off or fix it. First you have to have some metric to judge how bad it is. How many people are addicted, or how many people die, or how is the crime rate affected? Just how bad is it breaking? Next – and this is the part the vast majority of policy exercises miss – you have to try to understand what happens in the absence of a policy change. What is the cost of doing nothing, of taking no remediating action? Call this the null hypothesis. Maybe there is even a benefit to doing nothing. But only now, after evaluating the null hypothesis, are you in a position to propose remedies, because only now you have a metric to compare costs and benefits. If you leap directly to “the impacts of doing nothing are terrible, so we must do something, anything, because otherwise we are doing nothing”, then you have already lost. To be sure, policy makers and politicians feel that their job is to do something, to take action, and that if they are doing nothing then they aren't doing their jobs. That is just a recipe for bad policy. Without the null hypothesis, your policy development is a waste of time and, potentially, could make matters worse. This happens time and time again. Prohibition, for example, was exactly this sort of failure, and cost much more than it benefited, which is why it was considered a failure [2].

We keep making the same mistake. We have plenty of data and reporting, courtesy of the DEA, that the ongoing crackdown on methamphetamine production has created bigger and blacker markets, as well as mayhem and violence in Mexico, all without much impact on domestic drug use. Here is the DEA Statistics & Facts page – have a look and then make up your own mind.

I started writing about the potential negative impacts of restricting access to biological technologies in 2003 (PDF), including the likely emergence of black markets in the event of overregulation. I looked around for any data I could find on the impacts of regulating democratized technologies. In particular, I happened upon the DEA's first reporting of the impacts of the then newly instituted crackdown on domestic methamphetamine production and distribution. Even in 2003, the DEA was already observing that it had created bigger, blacker markets – that are by definition harder to surveil and disrupt – without impacting meth use. The same story has played out similarly in cocaine production and distribution, and more recently in the markets for “bath salts”, aka “legal highs”.

That is, we have multiple, clear demonstrations that, rather than improving the world, restricting access to distributed production can instead cause harm. But, really, when has this ever worked? And why do people think going down the same path in the future will lead anywhere else? I am still looking for data – any data at all – that supports the assertion that regulating biological technologies will have any different result. If you have such data, bring it. Let's see it. In the absence of that data, policy proposals that lead with regulation and restriction are doomed to repeat the failures of the past. It has always seemed to me like a terrible idea to transfer such policies over to biosecurity. Yet that is exactly what the Commentary proposes.

Brewing black markets.

The fundamental problem with the approach advocated in the Commentary is that security policies, unlike beer brewing, do not work equally well across all technical and economic scales. What works in one context will not work in another. Nuclear weapons can be secured by guns, gates, and guards because they are expensive to build and the raw materials are hard to come by, so heavy touch regulation works just fine. There are some industries – as it happens, beer brewing – where only light touch regulation works. In the U.S., we tried heavy touch regulation in the form of Prohibition, and it failed miserably, creating many more problems than it solved. There are other industries, for example DNA and gene synthesis, in which even light touch regulations are a bad idea. Indeed, light touch regulation has already created the problem it was supposed to prevent, namely the existence of DNA synthesis providers that 1) intentionally do not screen their orders and 2) ship to countries and customers that are on unofficial black lists.

For those who don't know this story: In early 2013, the International Council for the Life Sciences (ICLS) convened a meeting in Hong Kong to discuss "Codes of Conduct" for the DNA synthesis industry, namely screening orders and paying attention to who is doing the ordering. According to various codes and guidelines promulgated by industry associations and the NIH, DNA synthesis providers are supposed to reject orders that are similar to sequences that code for pathogens, or genes from pathogens, and it is suggested that they do not ship DNA to certain countries or customers (the unofficial black list). Here is a PDF of the meeting report; be sure to read through Appendix A.

The report is fairly anodyne in describing what emerged in discussions. But people who attended have since described in public the Chinese DNA synthesis market as follows. There are 3 tiers of DNA providers. The first tier is populated with companies that comply with the various guidelines and codes promulgated internationally because this tier serves international markets. There is a second tier that appears to similarly comply, because while they serve primarily the large internal market these companies have aspirations of also serving the international market. There is a third tier that exists specifically to serve orders from customers seeking ways around the guidelines and codes. (One company in this tier was described to me as a "DNA shanty", with the employees living over the lab.) Thus the relatively light touch guidelines (which are not laws) have directly incentivized exactly the behavior they were supposed to prevent. This is not a black market, per se, and cannot be accurately described as illegal, so let's call it a "grey market".

I should say here that this is entirely consistent with my understanding of biotech in China. In 2010, I attended a warm up meeting for the last round of BWC negotiations. After that meeting, I chatted with one of the Chinese representatives present, hoping to gain a little bit of insight into the size of the Chinese bioeconomy and the state of the industry. My query was met with frank acknowledgment that the Chinese government isn't able to keep track of the industry, doesn't know how many companies are active, or how many employees they have, or what they are up to, and so doesn't hold out much hope of controlling the industry. I covered this a bit in my 2012 Biodefense Net Assessment report for DHS. (If anyone has any new insight into the Chinese biotech industry, I am all ears.) Not that the U.S. or Europe is any better in this regard, as our mechanisms for tracking the biotech industry are completely dysfunctional, too. There could very well be DNA synthesis providers operating elsewhere that don't comply with the recommended codes of conduct: we have no real means of broadly surveying for this behavior. There are no physical means either to track it remotely or to control it.

I am a little bit sensitive about the apparent emergence of the DNA synthesis grey market, because I warned for years in print and in person that DNA screening would create exactly this outcome. I was condescendingly told on many occasions that it was foolish to imagine a black market for DNA. And then we have to do something, right? But it was never very complicated to think this through. DNA is cheap, and getting cheaper. You need this cheap DNA as code to build more complicated, more valuable things. Ergo, restrictions on DNA synthesis will incentivize people to seek, and to provide, DNA outside any control mechanism. The logic is pretty straightforward, and denying it is simply willful self-deception. Regulation of DNA synthesis will never work. In the vernacular of the day: because economics. To make it even simpler: because humans.

So the idea that people are still suggesting proscription of certain DNA sequences is a viable route to security just rankles. And it is demonstrably counterproductive. The restrictions incentivize the bad behavior they are supposed to prevent, probably much earlier than might have happened otherwise. The take home message here is that not all industries are the same, because not all technologies are the same, and that our policy approaches should take into account these differences rather than papering over them. In particular, restricting access to information in our modern economy is a losing game.

Where do we go from here?

We are still at the beginning of biotech. This is the most important time to get it right. This is the most important time not to screw up and make things worse. And it is important that we are at the beginning, because things are not yet screwed up.

Conversely, we are well down the road in developing and deploying drug policies, with much damage done. To be sure, despite the accumulated and ongoing costs, you have to acknowledge that it is not at all clear that suddenly legalizing drugs such as meth or cocaine would be a positive step. I am not in any way making that argument. But it is abundantly clear that drug enforcement activities have created the world we live in today. Was there an alternative? If the DEA had been able to do cost/benefit analysis of the impacts of its actions – that is, predict the emergence of DTOs and their role in production, trafficking, and violence – would the policy response 15 years ago have been any different? If Nixon had more thoughtfully considered even what was known 50 years ago about the impacts of proscription, would he have launched the war on drugs? That is a hard question, because drug policy is clearly driven more by stories and personal politics than by facts. I am inclined to think the present drug policy mess was inevitable. Even with the DEA's self-diagnosed role in creating and sustaining DTOs, the national conversation is still largely dominated by “the war on drugs”. And thus the first reaction to the prospect of microbial narcotics production is to employ strategies and tactics that have already failed elsewhere. I would hate to think we are in for a war on microbes, because that is doomed to failure.

But we haven't yet made all those mistakes with biological technologies. I continue to hope that, if nothing else, we will avoid making things worse by rejecting policies we already know won't work.

Notes:

[1] Pause here to note that even this early in the set up, the Commentary conflates via words and images the use of yeast in home brew narcotics with centralized brewing of narcotics by cartels. These are two quite different, and perhaps mutually exclusive, technoeconomic futures. Drug cartels very clearly have the resources to develop technology. Depending on whether you listen to the U.S. Navy or the U.S. Coast Guard, either 30% or 80% of the cocaine delivered to the U.S. is transported at some point in semisubmersible cargo vessels or in fully submersible cargo submarines. These 'smugglerines', if you will, are the result of specific technology development efforts directly incentivized by governmental interdiction efforts. Similarly, if cartels decide that developing biological technologies suits their business needs, they are likely to do so. And cartels certainly have incentives to develop opioid-producing yeast, because fermentation usually lowers the cost of goods between 50% and 90% compared to production in plants. Again, cartels have the resources, and they aren't stupid. If cartels do develop these yeast strains, for competitive reasons they certainly won't want anyone else to have them. Home brew narcotics would further undermine their monopoly.

[2] Prohibition was obviously the result of a complex socio-political situation, just as was its repeal. If you want a light touch look at the interaction of the teetotaler movement, the suffragette movement, and the utility of Prohibition in continued repression of freed slaves after the Civil War, check out Ken Burns's “Prohibition” on Netflix. But after all that, it was still a dismal failure that created more problems than it solved. Oh, and Prohibition didn't accomplish its intended aims. Anheuser-Busch thrived during those years. Its best selling products at the time were yeast and kettles (see William Knoedleseder's Bitter Brew)...